A while ago, I published a post titled "Simple Cheat Sheet to Sprint Planning Meeting." Though I understand every team is different, I thought it would be helpful to those who are new to agile processes. People are always looking for cheat sheets, templates, and stuff like that. What is the harm in giving them what they want?

In retrospect, I think the harm is the lack of context. When people come to a training class, they are presented with an ideal situation. Even the Scrum Guide was written as though you've been doing Scrum for a while. It doesn't talk about things that happen leading up to your team's first Sprint. It doesn't talk about the complexity of scaling to the enterprise. It's just vanilla.

I just got back from coaching a new team and I'll be heading back next week to see how they are doing. To get them moving forward, I facilitated release planning and sprint planning. That's where things started to get interesting. What if your team has never done release planning or sprint planning?

Release and Sprint Planning

The purpose of Release Planning is to give the organization a roadmap that helps it reach its goals. Because so much of what we learn emerges over time, the main thing we know at the start is that we don't know much.

The purpose of Sprint Planning is for the entire team to agree to complete a set of ready, top-ordered product backlog items. This agreement defines the sprint backlog and is based on the team’s velocity or capacity and the length of the sprint timebox.
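To make that agreement concrete, here is a minimal sketch of how a sprint backlog could be filled from an ordered product backlog. The `BacklogItem` class, the `plan_sprint` function, and the sample items are all hypothetical names I made up for illustration. The rule of skipping items that aren't ready and stopping at the first ready item that doesn't fit is my assumption, not something prescribed by Scrum.

```python
from dataclasses import dataclass


@dataclass
class BacklogItem:
    title: str
    points: int          # the team's estimate for this item
    ready: bool = True   # has the item been refined enough to start?


def plan_sprint(ordered_backlog, limit):
    """Walk the backlog top-down and take ready items while they fit.

    `limit` is the team's velocity or capacity for the sprint timebox.
    Assumption: we stop at the first ready item that does not fit, so
    lower-ordered work never jumps ahead of higher-ordered work.
    """
    sprint_backlog = []
    remaining = limit
    for item in ordered_backlog:
        if not item.ready:
            continue  # not ready, so not a candidate for this sprint
        if item.points > remaining:
            break     # respect the ordering rather than cherry-picking
        sprint_backlog.append(item)
        remaining -= item.points
    return sprint_backlog


# Hypothetical backlog, already in priority order.
backlog = [
    BacklogItem("Login page", 5),
    BacklogItem("Password reset", 3, ready=False),
    BacklogItem("Export report", 8),
    BacklogItem("Audit log", 5),
]

sprint_backlog = plan_sprint(backlog, limit=15)
print([item.title for item in sprint_backlog])
```

With a limit of 15 points, this sketch takes "Login page" and "Export report" and stops at "Audit log". In a real planning meeting the team would talk through that last gap rather than let a loop decide.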

You're new to Scrum. You don't have a velocity (rate of delivery in previous sprints) and you are not sure of your capacity (how much work your team can handle at a sustainable pace). What do you do?

The Backlog

The product backlog can address just about anything, including new functionality, bugs, and risks. For the purpose of sprint planning, product backlog items must be small enough to be completed (developed, tested, documented) during the sprint, and it must be possible to verify that they were implemented to the satisfaction of the Product Owner.

Again, you're new to Scrum. How do you know what small enough is?

Right Sizing Backlog Items

Product backlog items that are too large to be completed in a sprint, based on the team's historical data, should not be considered as sprint backlog candidates during the sprint planning meeting. Split them into smaller pieces first. Remember, each story must be able to stand on its own (a vertical slice). It should not be incomplete or process-based (a horizontal slice).
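As a rough illustration of "too large, based on historical data," the sketch below flags any item bigger than the largest item the team has ever finished in a single sprint. That threshold is only one possible heuristic and is my assumption. The `split_candidates` function and the sample data are hypothetical.

```python
def split_candidates(backlog, completed_sizes):
    """Return titles of items larger than anything finished in one sprint.

    `backlog` is a list of (title, points) pairs.
    `completed_sizes` holds the estimates of items the team has already
    completed within a sprint. With no history there is nothing to compare
    against, so the function returns an empty list.
    """
    if not completed_sizes:
        return []
    largest_done = max(completed_sizes)
    return [title for title, points in backlog if points > largest_done]


# Hypothetical data: the biggest item this team ever finished was 8 points.
history = [2, 3, 5, 8, 3]
backlog = [("Search", 5), ("Billing integration", 13), ("Profile page", 8)]

print(split_candidates(backlog, history))
```

Here only "Billing integration" is flagged for splitting, since 13 is above anything the team has completed before.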

Again, you're new to Scrum. You have no historical data.

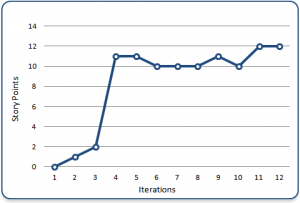

Determining Velocity

The velocity of a team is derived by summing the estimates of all completed and accepted work from the previous sprint. By tracking team velocity over time, teams focus less on utilization and more on throughput.
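The arithmetic is simple enough to sketch. Below, `sprint_velocity` sums the estimates of accepted items in one sprint, and `recent_average` averages the last few sprints. The three-sprint window is a common rule of thumb that I am assuming here, not something this post or the Scrum Guide defines. All names and numbers are made up for illustration.

```python
from statistics import mean


def sprint_velocity(items):
    """Sum the estimates of items that were completed AND accepted.

    `items` is a list of (points, accepted) pairs for one sprint.
    Work that was started but not accepted contributes nothing.
    """
    return sum(points for points, accepted in items if accepted)


def recent_average(velocities, window=3):
    """Average the most recent `window` sprint velocities (assumed window)."""
    recent = velocities[-window:]
    return mean(recent) if recent else None  # None when there is no history


# Hypothetical history: each inner list is one sprint.
sprints = [
    [(3, True), (5, True), (8, False)],   # the 8-pointer was not accepted
    [(5, True), (5, True)],
    [(2, True), (3, True), (8, True)],
]

velocities = [sprint_velocity(s) for s in sprints]
print(velocities)
print(recent_average(velocities))
```

For this made-up history the per-sprint velocities come out to 8, 10, and 13. Note that a brand-new team gets `None` from `recent_average`, which is an honest answer: there is no velocity until there is completed work.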

If you have a new team, not only do you not have a velocity, you won't have any completed work to compare your estimates to and you won't know how many deliverables to commit to. What do you do? I think you just go for it! You keep track of what you are completing and collect historical data. You get through the sprint and establish some context for future sprints.

The first few sprints are going to be pretty rocky until the team begins to stabilize. Just ask yourself: WWDD? What Would Deming Do? Plan a little; Do a little; Check what you did versus what you planned to do; Act on what you discover.